Machine Learning Needs a Langlands Programme

Tags: musings, research

Since I switched to the field of machine learning, I have been interested in its theoretical foundations; doing a lot of pure mathematics before my Ph.D. (and a tiny bit during it as well) apparently left its mark. I noticed two things relatively quickly:

- The field is humongous and, at present, it just keeps on growing[1].

- While there are commonalities between different ‘branches’ of machine learning, there is also a fair amount of separation: researchers who work primarily on computer vision applications, for example, by necessity use different architectures, learning paradigms, and theorems than those working on reinforcement learning.

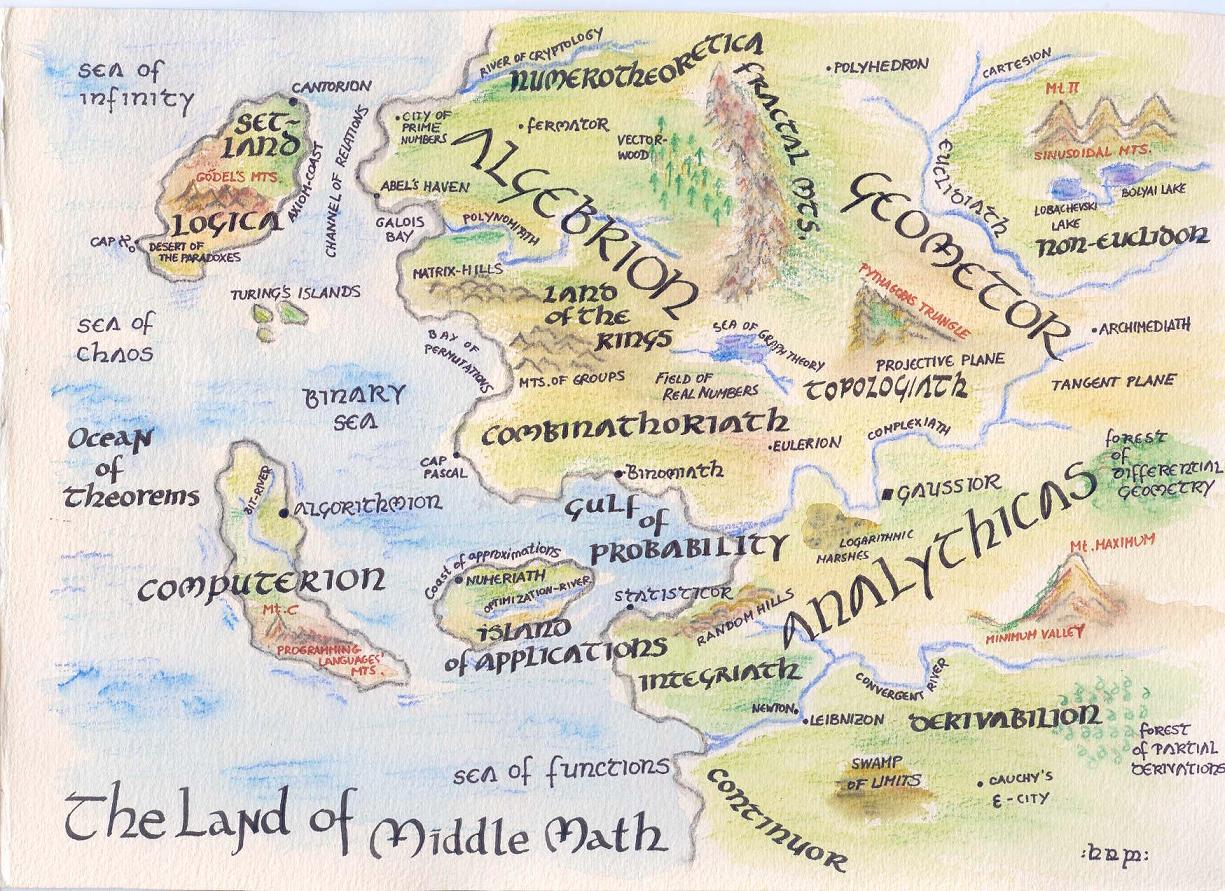

The second point should be familiar to anyone doing mathematics. The different branches use the same intellectual framework, of course, but often, it is hard to talk across the boundaries. This fact is often bemoaned and beautifully illustrated in various ‘maps of mathematics’. Here is a glorious version of the country of ‘Middle Math’ and its various provinces, domains, and fiefdoms, drawn by Prof. Dr. Franka Miriam Brückler:

Different solutions have been proposed over the years to improve this situation. One involves category theory, i.e. a way to generalise and abstract mathematical statements from their original domain so that they can be studied in a general setting[2]. Another, whose scope is more restricted but whose ultimate goals are far-reaching, is the Langlands programme.

The Langlands programme. Created by Robert Langlands in the 1960s and 1970s, the Langlands programme aims to study connections between number theory and geometry. Without going too much into the details, we can think of it as a Rosetta Stone for mathematics: the individual branches of mathematics are represented as different columns on the stone, and each statement and each theorem in one column has its counterpart in another. The beauty of this is that if I have a problem that I cannot solve in one domain, I can simply translate it to another one! André Weil discussed this analogy in a letter to his sister, and his work is a fascinating example of using parts of the mathematical Rosetta Stone to prove theorems.

Empirical and theoretical machine learning. In machine learning, there appears to be a rift between theoretical contributions and empirical ones. A good empirical contribution might, for example, present a new regularisation term or method along with evidence that it prevents overfitting. A large amount of knowledge is generated through these papers, and the resulting applications keep demonstrating that this knowledge can be used in practice. Yet this sort of ‘folk wisdom’ can sometimes be problematic and has led to less-than-favourable comparisons to alchemy[3]. I grant that it is somewhat dangerous to use techniques without understanding why they work. Theoretical contributions, by contrast, play catch-up most of the time. As an example, take batch normalisation[4]: it was originally published in 2015, but a theoretical analysis was only published in 2018. That is not to say that researchers should wait until they have worked out the theory in full detail; different researchers have different preferences for what they want to work on, and a good experimental setup is sufficient to demonstrate the utility of a novel technique. Yet there is an imbalance here: as empirical papers proceed in leaps and bounds, presenting more and more architectures, training paradigms, and so on, the fundamental questions remain unanswered.
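For readers who have not seen batch normalisation written out, here is a minimal sketch of its training-time forward pass for a single feature, in plain Python. It assumes the standard formulation of normalising a mini-batch by its own mean and variance and then applying a learned scale `gamma` and shift `beta`; the function name and the default `eps` are illustrative choices, and the running statistics used at inference time are deliberately omitted.

```python
import math
from typing import List


def batch_norm(batch: List[float], gamma: float = 1.0, beta: float = 0.0,
               eps: float = 1e-5) -> List[float]:
    """Normalise one feature across a mini-batch, then rescale and shift it.

    Training-time forward pass only; the running averages used at
    inference time are left out of this sketch.
    """
    n = len(batch)
    mean = sum(batch) / n
    # Biased (population) variance over the mini-batch.
    var = sum((x - mean) ** 2 for x in batch) / n
    inv_std = 1.0 / math.sqrt(var + eps)
    # Zero-mean, (almost) unit-variance values, followed by the learned affine map.
    return [gamma * (x - mean) * inv_std + beta for x in batch]


if __name__ == "__main__":
    out = batch_norm([1.0, 2.0, 3.0, 4.0])
    print([round(v, 3) for v in out])
```

The small `eps` only guards against division by zero for a constant batch; this is why the output variance is very slightly below 1.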

Fundamental questions. One of the fundamental questions in machine learning, which quickly leads to additional questions, is how to characterise the function learned by a neural network. Does that function belong to a certain class of functions? Are there particular functions that cannot be learned? What capacity is required to learn such a function? Can all networks of a certain type eventually learn such a function, provided they are given sufficient training examples? What type of structure in the data is used to arrive at a particular output? This list can be extended and, of course, progress is already being made: there are theorems for different architectures that characterise the capability of such networks to approximate any (continuous) function. For the deep sets architecture[5], this is an ongoing process: the original deep sets paper handles some special cases, such as countable domains, whereas a recent paper discusses the limitations of set functions by providing necessary conditions for universal function approximation.
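The deep sets architecture mentioned above builds set functions of the form ρ(Σ φ(x)), where φ is applied to every element and ρ to the pooled sum. The toy sketch below, in plain Python, only illustrates why this construction ignores the order of the elements and copes with sets of different sizes; the concrete `phi` and `rho` are hypothetical fixed functions standing in for the learned networks, and nothing here says anything about approximation power.

```python
import itertools
import math
from typing import Callable, Iterable, List


def phi(x: float) -> List[float]:
    # Hypothetical per-element encoder; in a deep sets model this is a learned network.
    return [x, x * x, math.sin(x)]


def rho(z: List[float]) -> float:
    # Hypothetical readout applied to the pooled representation; also learned in practice.
    return math.tanh(z[0]) + 0.1 * z[1] - z[2]


def deep_set(xs: Iterable[float],
             encoder: Callable[[float], List[float]] = phi,
             readout: Callable[[List[float]], float] = rho) -> float:
    """Evaluate readout(sum over x in xs of encoder(x))."""
    encoded = [encoder(x) for x in xs]
    if not encoded:
        raise ValueError("this sketch does not handle the empty set")
    # Sum pooling is commutative, so element order cannot matter
    # (up to floating-point rounding).
    pooled = [sum(column) for column in zip(*encoded)]
    return readout(pooled)


if __name__ == "__main__":
    xs = [0.5, -1.0, 2.0]
    outputs = [deep_set(p) for p in itertools.permutations(xs)]
    # All six orderings agree up to floating-point rounding.
    print(max(outputs) - min(outputs) < 1e-12)
    # The same function accepts sets of different sizes.
    print(deep_set([0.5]), deep_set([0.5, -1.0, 2.0, 3.0]))
```

Sum pooling is one choice among several permutation-invariant poolings; it is used here because it matches the ρ(Σ φ(x)) form.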

Missing links. What is missing in both of these papers, however, is a connection to other architectures. The classical way of writing a machine learning paper is to present a novel solution to a specific problem. We want to say ‘Look, we are able to do things now that we could not do before!’, such as the aforementioned learning on sets. This is highly relevant, but we must not forget to also look at how our novel approach is connected to the rest of the field. Does it permit more general statements? Does it shed light on a problem that was poorly understood before? If we never explore the links, we risk turning ourselves into toolmakers with too many bits and pieces. Looking for the general instead of the specific is the key to avoiding this, and this is why machine learning needs its own version of the Langlands programme. It does not have to be as ambitious or far-reaching, but it should motivate us to investigate outside our respective niches.

An example. A beautiful example of deep connections in deep learning is given by a recent paper on the Tropical Geometry of Deep Neural Networks. Here, the authors show how to represent a simple deep learning architecture, namely feedforward networks with ReLU activations, by means of tropical polynomials[6]. The spirit of the Langlands programme is seen in statements such as the following:

> We will show that the decision boundary of a neural network is a subset of a tropical hypersurface of a corresponding tropical polynomial […]

In a recent preprint, this work is extended, and explicit representations of the decision surface are computed. This is exciting and useful because, once such a mapping is in place, different strategies for training the network can be applied, and the resulting decision surfaces can be compared by comparing their representations! I am convinced that machine learning needs more connections like this; having access to representations that can be easily manipulated would be very powerful and could serve as an incubator for further success in the field.
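To give a feeling for the kind of translation involved, here is a small sketch in plain Python. In the max-plus (tropical) semiring, ‘addition’ is `max` and ‘multiplication’ is ordinary `+`, so a tropical polynomial is a maximum of finitely many affine functions. The sketch takes a toy one-hidden-layer ReLU network with integer weights and rewrites it as a difference P − Q of two tropical polynomials (a tropical rational function), then checks numerically that both forms agree. The weights, the helper names, and the construction itself are my own simplified illustration, not the paper's general proof.

```python
import itertools
from typing import List, Sequence, Tuple

# A tropical polynomial max_k (c_k + alpha_k . x), stored as (c_k, alpha_k) pairs.
# With negative slopes these are, strictly speaking, tropical Laurent polynomials.
Term = Tuple[float, Tuple[int, ...]]


def eval_trop(poly: List[Term], x: Sequence[float]) -> float:
    """Tropical sum is max and tropical product is ordinary addition."""
    return max(c + sum(a * xi for a, xi in zip(alpha, x)) for c, alpha in poly)


def relu_net(x: Sequence[float], W: List[List[int]], b: List[float],
             a: List[int], c: float) -> float:
    """One hidden ReLU layer, scalar output: sum_i a_i * max(w_i . x + b_i, 0) + c."""
    hidden = [max(sum(w * xj for w, xj in zip(wi, x)) + bi, 0.0)
              for wi, bi in zip(W, b)]
    return sum(ai * hi for ai, hi in zip(a, hidden)) + c


def to_tropical_rational(W: List[List[int]], b: List[float], a: List[int],
                         c: float) -> Tuple[List[Term], List[Term]]:
    """Return (P, Q) such that relu_net(x, W, b, a, c) == P(x) - Q(x).

    Uses |a_i| * max(l_i, 0) == max(|a_i| * l_i, 0) and expands each sum of
    maxima into a single maximum over subsets of hidden units.
    """
    dim = len(W[0])

    def expand(units: List[int], const: float) -> List[Term]:
        terms: List[Term] = []
        for r in range(len(units) + 1):
            for subset in itertools.combinations(units, r):
                coef = const + sum(abs(a[i]) * b[i] for i in subset)
                slope = tuple(sum(abs(a[i]) * W[i][j] for i in subset)
                              for j in range(dim))
                terms.append((coef, slope))
        return terms

    positive = [i for i, ai in enumerate(a) if ai > 0]
    negative = [i for i, ai in enumerate(a) if ai < 0]
    return expand(positive, c), expand(negative, 0.0)


if __name__ == "__main__":
    # Arbitrary integer weights for a toy network with 2 inputs and 3 hidden units.
    W = [[1, -2], [3, 1], [-1, 1]]
    b = [0.5, -1.0, 2.0]
    a = [2, -1, 1]
    c = 0.25
    P, Q = to_tropical_rational(W, b, a, c)
    for x in [(0.0, 0.0), (1.5, -0.5), (-2.0, 3.0)]:
        print(x, relu_net(x, W, b, a, c), eval_trop(P, x) - eval_trop(Q, x))
```

The brute-force expansion produces one term per subset of hidden units, so it is only meant for tiny examples; its point is that, once the network is in this form, questions about it become questions about maxima of affine functions.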

What now? This concludes my proposal for a Langlands programme for machine learning. If people are interested in this, we should think about what kind of conjectures and representations we need. Personally, I think that one step would be to understand and revisit our field's manifold hypothesis, i.e. the assumption that high-dimensional real-world data lie on or near a low-dimensional manifold. Here are some questions that come to mind:

- What types of manifolds can we expect to obtain when we are dealing with real-world data?

- When does this hypothesis fail?

- What would be the consequences of such a failure?
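As one small, concrete way to start probing the first two questions, one can estimate the intrinsic dimension of a point cloud and compare it with the ambient dimension. The sketch below uses the maximum-likelihood estimator of Levina and Bickel, which is my choice for illustration (many other estimators exist), in plain Python. The synthetic data (a closed curve and a flat torus placed in ten dimensions), the neighbourhood size `k`, and all function names are assumptions made for this example; real data with noise and non-uniform sampling will give far less clean answers.

```python
import math
import random
from typing import List, Sequence

Point = List[float]


def sample_closed_curve(n: int, rng: random.Random) -> List[Point]:
    """Points on a 1-D closed curve in R^10: t -> (cos t, sin t, ..., cos 5t, sin 5t)."""
    pts = []
    for _ in range(n):
        t = rng.uniform(0.0, 2.0 * math.pi)
        pts.append([f(m * t) for m in range(1, 6) for f in (math.cos, math.sin)])
    return pts


def sample_flat_torus(n: int, rng: random.Random) -> List[Point]:
    """Points on a 2-D flat torus (cos s, sin s, cos t, sin t), zero-padded to R^10."""
    pts = []
    for _ in range(n):
        s, t = rng.uniform(0.0, 2.0 * math.pi), rng.uniform(0.0, 2.0 * math.pi)
        pts.append([math.cos(s), math.sin(s), math.cos(t), math.sin(t)] + [0.0] * 6)
    return pts


def mle_intrinsic_dimension(points: Sequence[Sequence[float]], k: int = 10) -> float:
    """Levina-Bickel maximum-likelihood estimate, averaged over all points.

    Brute-force O(n^2) neighbour search; assumes there are no duplicate points.
    """
    estimates = []
    for i, p in enumerate(points):
        # T[j] is the distance from p to its (j+1)-th nearest neighbour.
        T = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)[:k]
        s = sum(math.log(T[k - 1] / T[j]) for j in range(k - 1))
        estimates.append((k - 1) / s)
    return sum(estimates) / len(estimates)


if __name__ == "__main__":
    rng = random.Random(0)
    print("curve:", mle_intrinsic_dimension(sample_closed_curve(400, rng)))
    print("torus:", mle_intrinsic_dimension(sample_flat_torus(800, rng)))
```

In the idealised, locally uniform setting this estimator is biased slightly upwards, by roughly a factor of (k − 1)/(k − 2), so values a little above 1 and 2 are to be expected rather than exact integers. Where such estimates drift with `k`, or disagree between neighbourhoods, is one place where the manifold hypothesis might start to look shaky.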

There are many more questions like this and not enough answers, but I am convinced that our understanding will improve as the field matures.

I hope you enjoyed these analogies and can draw some inspiration from them!

1. I will set aside the discussion of an impending ‘AI Winter’ for now because I am not qualified to comment on it.

2. As I am no category theorist myself, this definition does not do the field justice. Please do yourself a favour and look at the beautiful and instructive articles written by Tai-Danae Bradley to get a better idea of what this is all about.

3. Again, I am not qualified to comment on this. I know that my particular niche of machine learning uses the scientific method to advance, and I leave it to the giants to battle out whether alchemy is an appropriate term for the whole field.

4. A way to improve the training process of a neural network by making the distribution of inputs to its layers more stable. This is typically achieved by controlling the mean and variance of the distribution.

5. A specific neural network architecture that permits learning on sets, i.e. data without a natural ordering and of potentially varying size.

6. See here for a beautiful introduction to tropical geometry if, like me, you are not familiar with it.